The Nervo board is now available in the InMoov site shop! (By the time I published this post it was already out of stock, but you can backorder.)

Nervo board, photo Leon Van der Host

After the Tech Fest in India, one of the first things I had to do was fix a hardware issue on the InMoov 2 hand. One of the drivers had burned out because the hand stayed powered with the thumb stuck in a wrong position. The main cause was a thumb wire that got tangled inside the palm. I had to redesign the path of that wire and re-print the parts.

A few days ago, I received the Myo Thalmic Armband sent by Yohan of the FabLab Rennes and MHK (Bionico, Nicolas Huchet).

It was like receiving a Christmas present.

Searching on the Net, I found an Arduino library that allows the Thalmic to communicate with the Arduino via the computer.

I tested it and wrote a script, which first let me use it with the InMoov finger starter (see third video) to do 3 small movements. The advantage of the Thalmic is its ease of use and setup. Once calibrated, you can remove it from your arm and put it back on in the same position, and it automatically works again with no need for re-calibration.

The goal with the Thalmic was to be able to use it with the InMoov 2 hand, ideally with a variety of possible gestures. The InMoov 2 has an onboard Arduino Nano that lets the user create any gesture desired, and the gestures can be modified through a plug-and-play USB connection to any computer. The Thalmic uses Bluetooth to communicate, and as of today it cannot communicate directly with any Bluetooth device of your choice. It will, though, and the plan is to have an onboard Bluetooth Arduino in the hand to allow direct communication.
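One way to picture "any gesture desired" is a lookup table from a recognized armband pose to a target position for each finger. The sketch below is only an illustration in Python (the code on the Nano itself would be Arduino code); the pose names, finger names and angles are all hypothetical and are not taken from the actual InMoov 2 hand firmware.

```python
# Illustrative only: pose names, finger order and angles are assumptions.
FINGERS = ("thumb", "index", "middle", "ring", "pinky")

# Hypothetical gesture table: pose name -> servo angle (degrees) per finger.
GESTURES = {
    "rest": (0, 0, 0, 0, 0),
    "fist": (180, 180, 180, 180, 180),
    "pinch": (120, 120, 0, 0, 0),
}


def targets_for_pose(pose, current):
    """Return the finger targets for a pose.

    If the pose is not in the table, keep the current positions so an
    unrecognized reading does not move the hand.
    """
    angles = GESTURES.get(pose)
    if angles is None:
        return dict(current)
    return dict(zip(FINGERS, angles))
```

Adding a gesture is then just a new row in the table, which is the kind of change that could be pushed over the USB connection.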

I have also been able to test the Thalmic on the bicep muscle. It is more difficult to calibrate, but I have been able to reproduce 3 different sets of gestures pretty reliably. However, the arm gestures needed to activate the sensor have to be rather large, which is inconvenient if you want only the fingers of the InMoov 2 hand to be triggered.

So this is huge progress! In the video below I’m double-tapping my fingers to « wake up » the Thalmic, but I can also do it without, which leaves the hand constantly ready for any muscle activity.

What else happened this month:

Alessandro has borged the Leap Motion into MyRobotLab; it is now very easy to run an InMoov hand or all sorts of devices with the Leap Motion.

InMoov does two Make Magazine covers, USA and Germany:

Read the article about the adventure of Nicolas Huchet

Le nouvel âge du « faire » des makers, Les Echos:

Rights reserved by InMoov

Imerir University, Institut Méditerranéen d’Etude et de Recherche en Informatique et Robotique.

Open Doors see link

France3 TV:

Time-lapse of their build:

Aperobo Perpignan, a monthly meetup for robotics enthusiasts. See link

Paris Machine Learning Meetup: I gave a short talk on the InMoov project to get more people involved in AI research and deep learning algorithms.

InMoov Explorer with WeVolver, organised by Richards Huskes and Bram Geenen.

The Robots For Good project is seeking Makers from every neck of the woods to 3D print parts for an InMoov robot as a means of getting bed-bound kids at Great Ormond Street Children’s Hospital out of their beds and into the London Zoo, virtually. While hospitalized children wear immersive VR headsets from Oculus Rift, they will, ideally, be able to control an InMoov robot as it explores the London Zoo.

Guardian Article

3dprintingindustry article

3D Printing Magazine article

InMoov Explorer

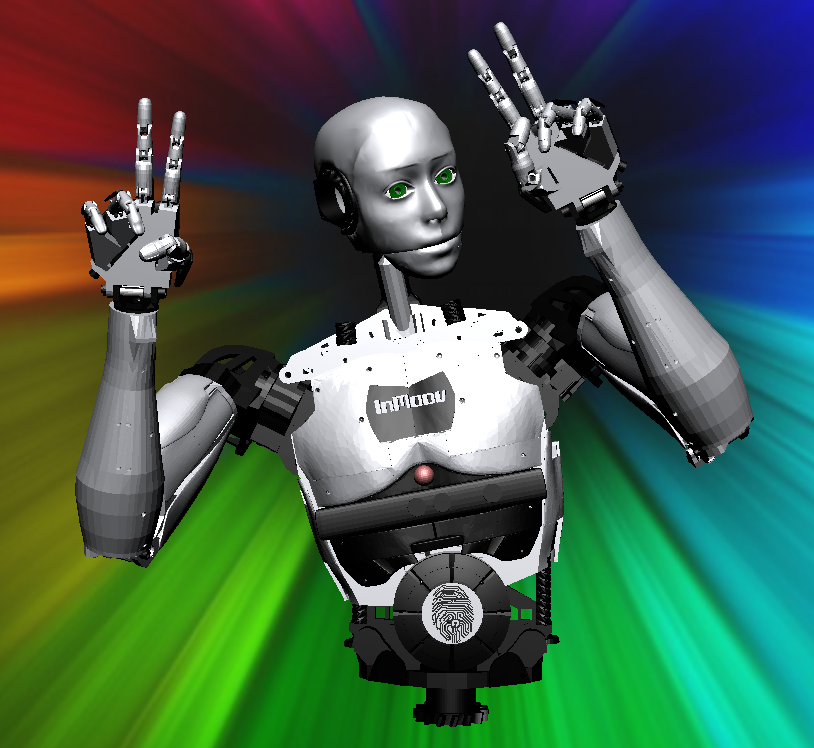

Virtual InMoov is getting rigged by Gareth in Blender and will be very useful for implementing movements in MyRobotLab.

« The Virtual InMoov is ported into the Game Logic of the 3D Blender package.

It's controlled using a Parallax Propeller and PySerial setup.

The serial data is extracted by the Python script; Blender has a native Python compiler built in, which makes software control of 3D objects a cinch.

On the hardware side it's interfaced with 16 ADC channels, each wired to a potentiometer to control the joints.

The next stage is to link the system up to the open source MyRobotLab software, which means we could use Kinect and OpenCV routines for interaction. » says Gareth.
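Gareth's description suggests a simple pipeline: read a frame of serial data carrying the 16 potentiometer readings, then turn each reading into a joint angle. Here is a minimal sketch of that idea in Python. The wire format is not given in the quote, so the code assumes (hypothetically) one comma-separated text line of 16 integers per frame, a 12-bit ADC range of 0 to 4095, and joints that sweep 0 to 180 degrees. Gareth's actual setup may differ on all three points.

```python
NUM_CHANNELS = 16                   # from the description: 16 ADC channels
ADC_MAX = 4095                      # assumption: 12-bit ADC readings
ANGLE_MIN, ANGLE_MAX = 0.0, 180.0   # assumption: joint sweep in degrees


def parse_frame(line):
    """Parse one text frame into 16 integers, or return None if malformed."""
    if isinstance(line, bytes):
        line = line.decode("ascii", errors="replace")
    fields = line.strip().split(",")
    if len(fields) != NUM_CHANNELS:
        return None
    try:
        values = [int(field) for field in fields]
    except ValueError:
        return None
    if any(v < 0 or v > ADC_MAX for v in values):
        return None
    return values


def adc_to_angle(value):
    """Map a raw ADC reading linearly onto the joint's angle range."""
    return ANGLE_MIN + (ANGLE_MAX - ANGLE_MIN) * value / ADC_MAX


def frame_to_angles(line):
    """Convert one serial frame into 16 joint angles, or None if malformed."""
    values = parse_frame(line)
    if values is None:
        return None
    return [adc_to_angle(v) for v in values]
```

With PySerial, the bytes returned by `readline()` on an open `serial.Serial` port could be passed straight to `frame_to_angles`, and the resulting angles applied to the rig's joints. Malformed frames return `None` so a glitch on the line can be skipped rather than jerking the model.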

See the Hackaday story.

Virtual InMoov rigged by Gareth in Blender

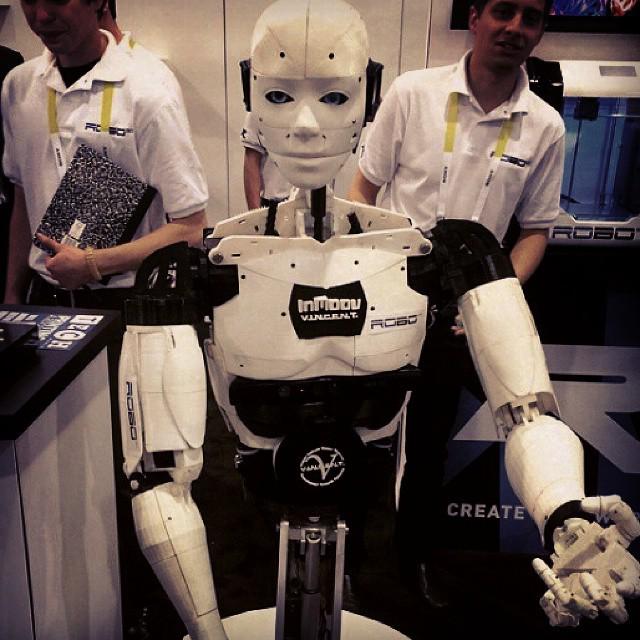

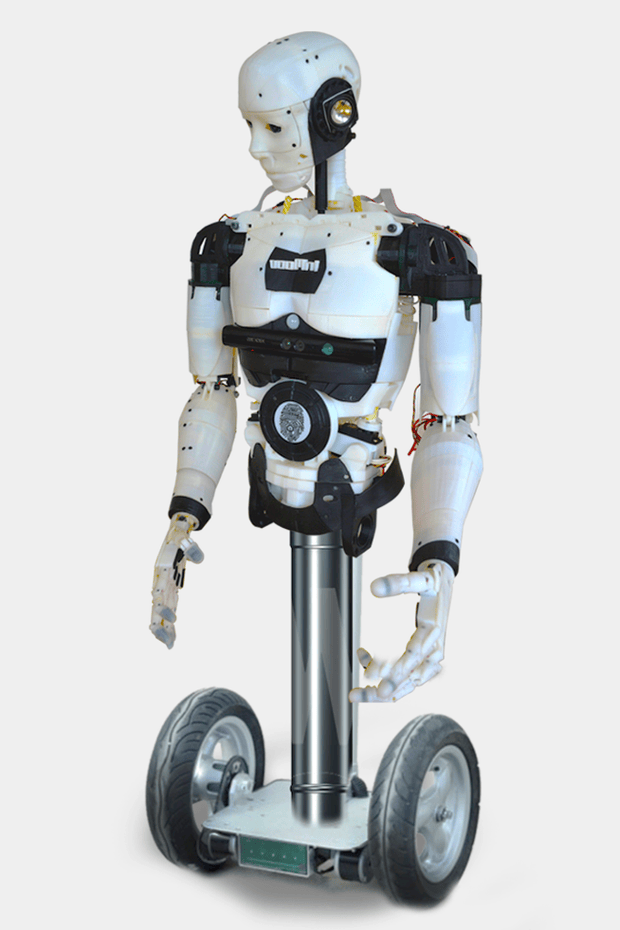

InMoov at CES 2015 on mobile platform: